Use PySpark withColumnRenamed() to rename a DataFrame column, we often need to rename one column or multiple (or all) columns on PySpark DataFrame, you can do this in several ways. When columns are nested it becomes complicated.

Since DataFrame’s are an immutable collection, you can’t rename or update a column instead when using withColumnRenamed() it creates a new DataFrame with updated column names, In this PySpark article, I will cover different ways to rename columns with several use cases like rename nested column, all columns, selected multiple columns with Python/PySpark examples.

First, let’s create our data set to work with.

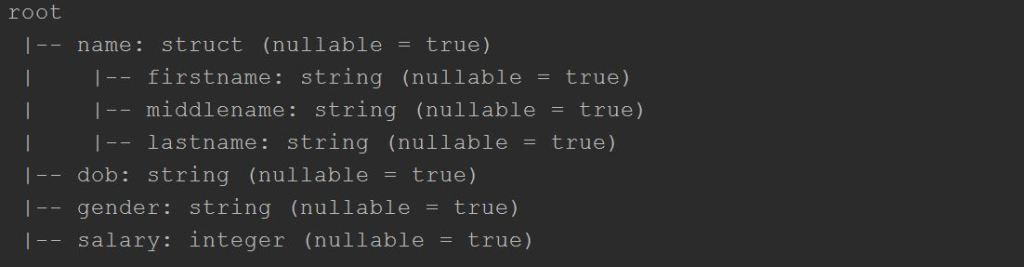

Our base schema with nested structure.

Let’s create the DataFrame by using parallelize and provide the above schema.

Below is our schema structure. I am not printing data here as it is not necessary for our examples. This schema has a nested structure.

1. PySpark withColumnRenamed – To Rename DataFrame Column Name

PySpark has a withColumnRenamed() function on DataFrame to change a column name. This is the most straight-forward approach; this function takes two parameters; the first is your existing column name and the second is the new column name you wish for.

PySpark withColumnRenamed() Syntax:

existingName – The existing column name you want to change

newName – New name of the column

Returns a new DataFrame with a column renamed.

Example:

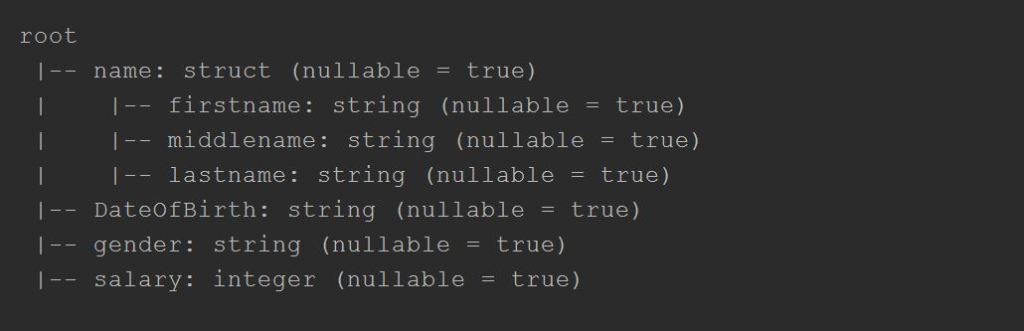

The above statement changes column “dob” to “DateOfBirth” on PySpark DataFrame.

Note that withColumnRenamed function returns a new DataFrame and doesn’t modify the current DataFrame.

2. PySpark withColumnRenamed – To Rename Multiple Columns

To change multiple column names, we should chain withColumnRenamed functions as shown below. You can also store all columns to rename in a list and loop through to rename all columns, I will leave this to you to explore.

This creates a new DataFrame “df2” after renaming dob and salary columns.

3. Using PySpark StructType – To Rename a Nested Column in Dataframe

Changing a column name on nested data is not straight forward and we can do this by creating a new schema with new DataFrame columns using StructType and use it using cast function as shown below.

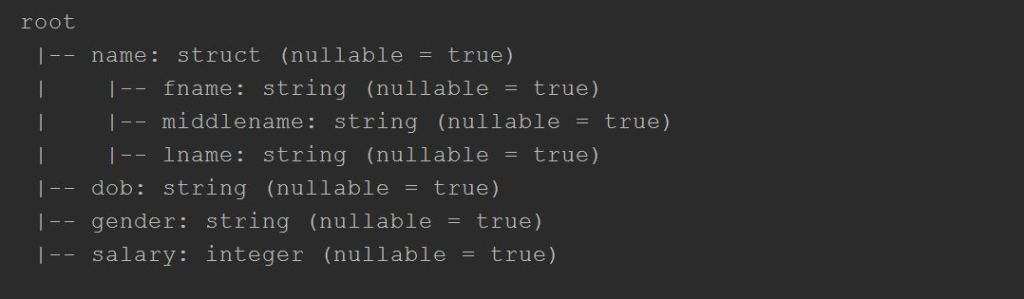

This statement renames firstname to fname and lastname to lname within name structure.

4. Using select and alias – To Rename Nested Elements

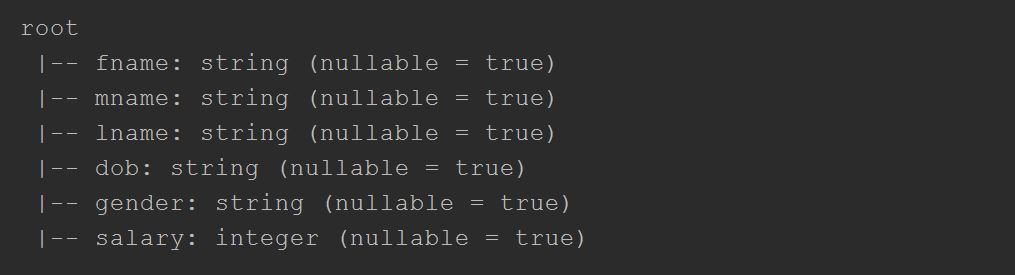

Let’s see another way to change nested columns by transposing the structure to flat.

5. Using PySpark DataFrame withColumn – To Rename Nested Columns

When you have nested columns on PySpark DatFrame and if you want to rename it, use withColumn on a data frame object to create a new column from an existing and we will need to drop the existing column. Below example creates a “fname” column from “name.firstname” and drops the “name” column

6. Using a dictionary and withColumnRenamed()

Another way to change all column names on Dataframe is to use col() function.

7. Using col().alias() with a Dictionary and List Comprehension

df_initial = spark.read.load('/mnt/datalake/bronze/testData')

rename_dict = {

'FName':'FirstName',

'LName':'LastName',

'DOB':'BirthDate'

}

df_renamed = df_initial \

.select([col(c).alias(rename_dict.get(c, c)) for c in df_initial.columns])8. Using toDF() – To Change all Columns in a PySpark DataFrame

When we have data in a flat structure (without nested), use toDF() with a new schema to change all column names.

More Examples:

Conclusion:

In this article, we have learned about different ways to rename all, single, multiple, and nested columns on PySpark DataFrame.