Introduction to PySpark when Function

PySpark “when” is a function used with PySpark DataFrames to derive a new column based on conditions. It can also update an existing column: any column in a DataFrame can be rewritten with when based on the conditions you need.

PySpark DataFrames support SQL-style operations on data, and “when” is a SQL function in pyspark.sql.functions that works like the SQL CASE WHEN expression. We can write our own conditions and pass them to when to compute a value for each row.

The when function is a SQL function whose return type is Column.

Syntax for PySpark when Function

The syntax for the PySpark when function is:

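df.select(when(condition, value).otherwise(default_value))

df.withColumn("new_column", when(condition, value).otherwise(default_value))

Here condition is a Boolean column expression, value is returned for rows where it holds, and otherwise(default_value) is optional.

Code:

from pyspark.sql.functions import when, col
a.withColumn("condition1", when(col("condition2") == "value", "result")).show()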

Working of PySpark when Function

Let us see how the when function works in PySpark:

The when function is a Spark function, so it must be imported before use:

from pyspark.sql.functions import when

(The Scala equivalent is import org.apache.spark.sql.functions.when.)

The when function first checks the condition for each row of the DataFrame and then assigns values accordingly. With it we can alter an existing column in a DataFrame or add a new column.

We can also use a SQL CASE statement, or chain the otherwise function onto when to supply a value for rows that don't satisfy any condition. Without otherwise, those rows get null.

The data can also be categorized with a SQL CASE expression, where CASE followed by one or more WHEN clauses assigns a value to each row according to the first condition it meets.

The when function checks each value against the condition and outputs the new column based on which condition is satisfied. It is similar to an if-then-else clause, or CASE WHEN in SQL. We can chain multiple when calls in a single column expression.

We can alter or update any column of a PySpark DataFrame based on the condition required. Each row receives the value of the first condition it satisfies, or the otherwise value when it satisfies none.

Example of PySpark when Function

Let us see some examples of how the PySpark when function works:

Example #1

Create a DataFrame in PySpark:

Let’s first create a DataFrame in Python.

createDataFrame is used to create a DataFrame in Python.

a = spark.createDataFrame(["SAM", "JOHN", "AND", "ROBIN", "ANAND"], "string").toDF("Name")
a.show()

Let’s check this by introducing a new column based on a condition satisfied by the DataFrame.

The withColumn method is used to introduce a new column in a DataFrame, and the when clause fills it according to the condition.

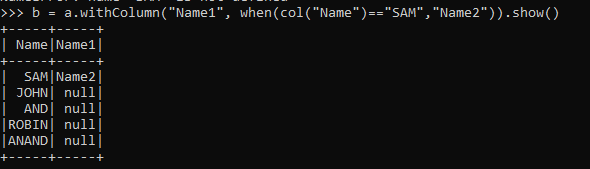

from pyspark.sql.functions import when, col
a.withColumn("Name1", when(col("Name") == "SAM", "Name2")).show()

The condition is satisfied for the row SAM, so the new column Name1 holds "Name2" there and null for every other row.


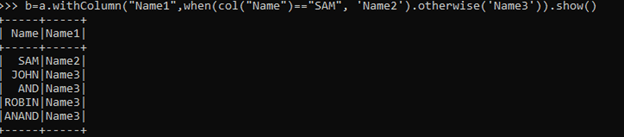

We can also use the otherwise function to fill in a value for rows that don’t satisfy the condition.

Code:

a.withColumn("Name1", when(col("Name") == "SAM", "Name2").otherwise("Name3")).show()

We can also use selectExpr to select columns in a DataFrame with SQL expressions. A CASE statement with multiple WHEN clauses can be used inside it to assign values.

Let us see that with an example:

Let’s create a DataFrame with the same values as above.

Code:

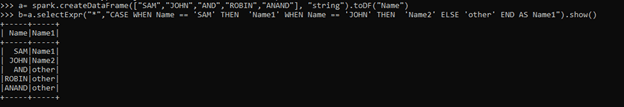

a = spark.createDataFrame(["SAM", "JOHN", "AND", "ROBIN", "ANAND"], "string").toDF("Name")
a.show()

selectExpr selects columns using SQL expressions, so we can write a CASE statement with multiple WHEN clauses that checks each condition and supplies the corresponding value.

Code:

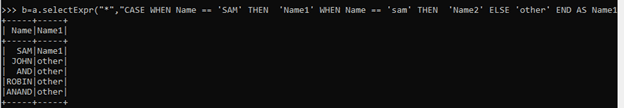

a.selectExpr("*", "CASE WHEN Name == 'SAM' THEN 'Name1' WHEN Name == 'JOHN' THEN 'Name2' ELSE 'other' END AS Name1").show()

Example #2

Not every WHEN clause has to be satisfied. Each row takes the value of the first clause that evaluates to true, and clauses that evaluate to false are skipped.

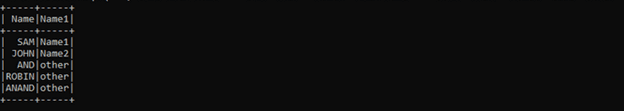

a.selectExpr("*", "CASE WHEN Name == 'SAM' THEN 'Name1' WHEN Name == 'sam' THEN 'Name2' ELSE 'other' END AS Name1").show()

Here only the first condition can be true: string comparison is case-sensitive, so no row equals 'sam', and that WHEN clause is simply skipped.


So values are assigned only for conditions that evaluate to true.

The expression checks the conditions for each row of the DataFrame and then assigns the value associated with the first one that holds.

Rows that satisfy a condition get its value, and the rest are filled with the ELSE value.


We can also combine conditions inside when using the operators & (and), | (or) and ~ (not), wrapping each comparison in parentheses.

From the above examples, we saw how to use the when function with PySpark.

Note:

- when can be used in a select operation as well as with the withColumn function.

- when is a SQL function, so it behaves like the SQL CASE WHEN expression.

- when can be used with Spark DataFrames.

- when can be chained to handle multiple conditions, like a CASE statement with multiple WHEN clauses.