Introduction to PySpark SQL Types

pyspark.sql.types is the PySpark module that defines the data types used in the PySpark data model. When a DataFrame is created, each of its columns has a defined data type that determines what kind of values the column can hold. All of these SQL types share a common base class, DataType, which is the foundation for every data type in PySpark.

The base class pyspark.sql.types.DataType is subclassed by every data type that can be defined and used in PySpark. The module contains many such types, for example StringType and numeric types such as ByteType and IntegerType, which can be used to define a data model in PySpark. In this article, we look at several ways of using PySpark SQL types.

Syntax of PySpark SQL Types

The syntax is as follows:

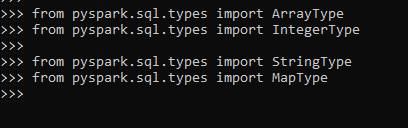

from pyspark.sql.types import ArrayType

from pyspark.sql.types import IntegerType

from pyspark.sql.types import StringType

from pyspark.sql.types import MapType

These are the imports for the SQL types used in this article.


Working of SQL Types in PySpark

Let us see how SQL types work in PySpark:

- The SQL types in PySpark define the type of value that can be stored in the PySpark data model. Numeric types have a fixed range: for example, ByteType holds 1-byte signed integers from -128 to 127, while IntegerType holds 4-byte signed integers.

- The pyspark.sql.types module provides a class for each kind of data that needs to be stored and defines how that data is represented. Examples include BinaryType and the numeric types.

- Once the data type is defined, analyzing the data becomes easier, and type-specific operations can be applied to it.

- Every column created in a DataFrame needs a data type, and PySpark SQL types provide it. A column can also be converted to a different type later, for example with Column.cast(), when requirements change.

Let’s check the creation and working of PySpark SQL Types with some coding examples.

Examples of PySpark SQL Types

Let us look at some examples of how PySpark SQL types work. Each of these types is a subclass of the DataType base class.

ArrayType

ArrayType represents a column whose values are arrays of elements of a single type. It is imported from the pyspark.sql.types package:

from pyspark.sql.types import ArrayType

ArrayType takes the element type and a containsNull flag, and it provides methods that describe the type:

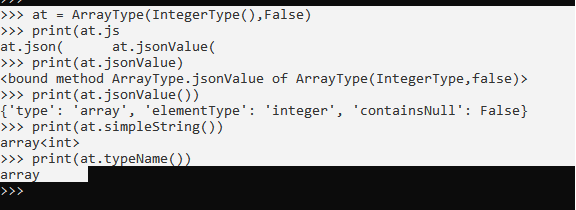

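from pyspark.sql.types import ArrayType, IntegerType

# An array of integers whose elements may not be null (containsNull=False)
at = ArrayType(IntegerType(), False)

print(at.jsonValue())

print(at.simpleString())

print(at.typeName())

These methods return the type as a JSON-compatible value, as a short SQL type string, and as its type name.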

Screenshot:

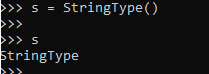

StringType

This data type is used to represent string values.

from pyspark.sql.types import StringType

s = StringType()

print(s)

This defines the type as StringType. String functions, such as those in pyspark.sql.functions, can then be applied to columns of this type.

Screenshot:

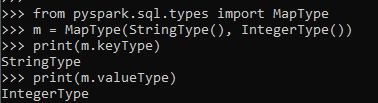

MapType

MapType represents key-value pairs in a DataFrame column. It is defined by a key type and a value type.

from pyspark.sql.types import MapType

m = MapType(StringType(), IntegerType())

print(m.keyType)

print(m.valueType)

Screenshot:

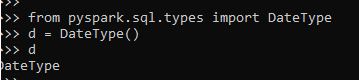

DateType

DateType is used to represent date values (year, month, and day) in a PySpark DataFrame. Once a column is converted to DateType, date functions such as date_add() and datediff() from pyspark.sql.functions can be applied to it.

from pyspark.sql.types import DateType

d = DateType()

print(d)

Screenshot:

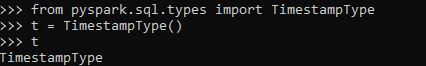

TimestampType

TimestampType represents date-and-time values in a DataFrame.

TimestampType() creates the SQL type used for timestamp columns.

Timestamp values are written in the format yyyy-MM-dd HH:mm:ss.SSSSSS, with up to microsecond precision.

from pyspark.sql.types import TimestampType

t = TimestampType()

print(t)

These are some of the ways SQL types are created and used to define DataFrame columns in PySpark.

Note

1. PySpark SQL types are the data types used in the PySpark data model.

2. The pyspark.sql.types package provides all of these types.

3. Numeric types can only hold values within a fixed range.

4. PySpark SQL types are used to create a DataFrame with a specific schema.

5. All SQL types derive from the base class DataType.