Introduction to PySpark Round Function

PySpark round is a function in pyspark.sql.functions that rounds the values of a column in a PySpark data frame. It rounds each value to the given scale (number of decimal places) using the HALF_UP rounding mode. PySpark also provides related rounding functions: ceil always rounds up, and floor always rounds down.

The round function rounds each value to the nearest value at the requested decimal place. For a floating-point column, the result is also a floating-point number. The scale parameter decides the decimal place to which the values are rounded.

Let us look at PySpark round in more detail.

Syntax for PySpark Round Function

The syntax for PySpark Round function is:

from pyspark.sql.functions import round, col

b.select("*",round("ID",2)).show()

- b: The data frame that holds the column to round.

- select(): The select operation. The "*" argument keeps all the existing columns of the data frame.

- round(): The round function to be used. It takes two parameters: the column (or column name) and an optional scale, which is the number of decimal places to round to. The scale defaults to 0.

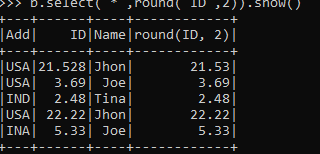

Screenshot:

Working of PySpark Round Function

The round operation works on a data frame column: it takes the column as a parameter and rounds every value in it. It also accepts an optional scale parameter that decides the position to which the rounding is done. If no scale is given, it defaults to 0, so the values are rounded to the nearest integer, and a data frame with the result is returned.
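The HALF_UP behavior can be illustrated in plain Python with the decimal module. This is only a sketch of the rounding rule, not Spark's implementation, and the helper name round_half_up is hypothetical. It also shows why results can differ from Python's built-in round, which rounds ties to the nearest even number.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_half_up(value: str, scale: int = 0) -> Decimal:
    """Round a decimal string to `scale` places; ties go away from zero."""
    quantum = Decimal(1).scaleb(-scale)  # scale=2 -> Decimal("0.01")
    return Decimal(value).quantize(quantum, rounding=ROUND_HALF_UP)


print(round_half_up("21.528", 2))  # 21.53
print(round_half_up("2.5"))        # 3
print(round_half_up("-2.5"))       # -3
print(round(2.5))                  # 2, because the built-in rounds ties to even
```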

The round function is the main tool for rounding data: the rounded values can be stored in a new data frame or selected alongside the existing columns. The function is applied to each and every value of the column. We can use round to round to the nearest value, ceil to round up, or floor to round down the data elements in a data frame.

Let’s check the creation and usage with some coding examples.

Examples of PySpark Round Function

Let us see some examples of how PySpark Round operation works. Let’s start by creating simple data in PySpark.

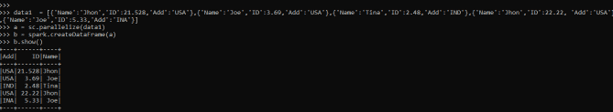

data1 = [{'Name':'Jhon','ID':21.528,'Add':'USA'},{'Name':'Joe','ID':3.69,'Add':'USA'},{'Name':'Tina','ID':2.48,'Add':'IND'},{'Name':'Jhon','ID':22.22, 'Add':'USA'},{'Name':'Joe','ID':5.33,'Add':'INA'}]

Sample data is created with Name, ID, and Add as the fields.

a = sc.parallelize(data1)

An RDD is created using sc.parallelize. Here sc is the SparkContext that the PySpark shell provides.

b = spark.createDataFrame(a)

b.show()

The data frame is created using spark.createDataFrame, where spark is the SparkSession, and then displayed.

Screenshot:

Let us round the value of the ID and use the round function on it.

b.select("*",round("ID")).show()

This selects all the columns of the data frame and adds a new column in which each ID value is rounded to the nearest integer. The new column can be used further for analysis.

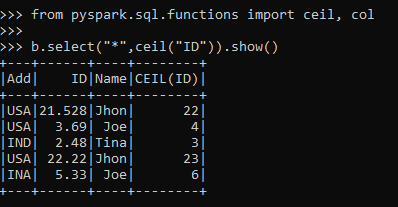

The ceil function is the PySpark round-up function. It takes the column values and rounds each one up to the next integer, returning the result as a new column in the PySpark data frame.

from pyspark.sql.functions import ceil, col

b.select("*",ceil("ID")).show()

This is an example of a round-up function.

Screenshot:

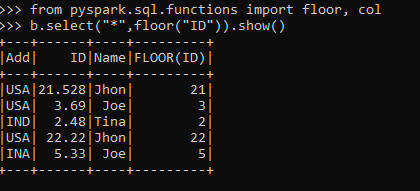

The floor function is the PySpark round-down function. It takes the column values and rounds each one down to the previous integer, returning the result as a new column in the PySpark data frame.

from pyspark.sql.functions import floor, col

b.select("*",floor("ID")).show()

This is an example of the round-down function.

Screenshot:

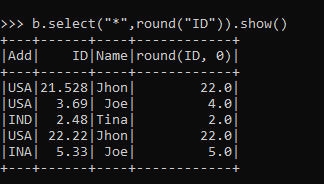

The round function, called without a scale, rounds each column value to the nearest integer and returns the result as a new column in the PySpark data frame.

b.select("*",round("ID")).show()

Screenshot:

When a scale is passed as the second parameter, the round function rounds each value to that number of decimal places and returns the result as a new column in the PySpark data frame.

b.select("*",round("ID",2)).show()

Screenshot:

These are some examples of the PySpark round function.

Note:

- PySpark round is a rounding function in PySpark.

- PySpark round rounds the values of a data frame column to a given number of decimal places.

- The related functions ceil and floor can be used to round the values of the data frame up or down.

- PySpark round function results can be used to create new columns in the data frame.

- ceil and floor are separate functions from round: ceil always rounds up and floor always rounds down, while round goes to the nearest value.