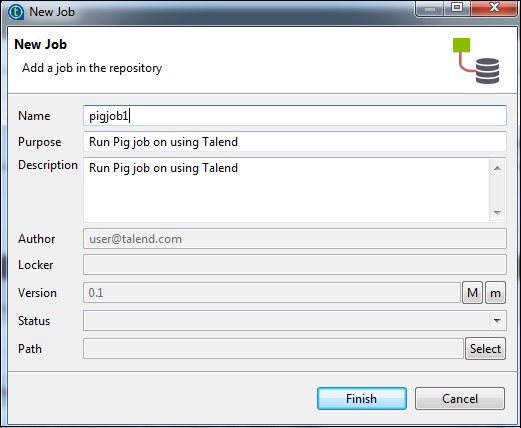

Creating a Talend Pig Job

In this section, let us learn how to run a Pig job in Talend. We will process NYSE stock data to find the average stock volume of IBM.

To do this, right-click Job Designs and create a new job named pigjob. Enter the details of the job and click Finish.

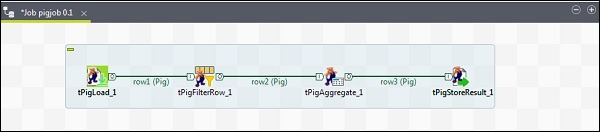

Adding Components to Pig Job

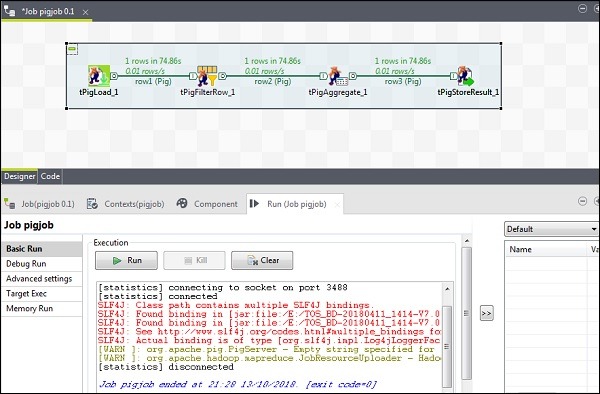

To build the Pig job, drag and drop four Talend components from the Palette to the design workspace: tPigLoad, tPigFilterRow, tPigAggregate and tPigStoreResult.

Then right-click tPigLoad and create a Pig Combine connection to tPigFilterRow. Next, right-click tPigFilterRow and create a Pig Combine connection to tPigAggregate. Finally, right-click tPigAggregate and create a Pig Combine connection to tPigStoreResult.

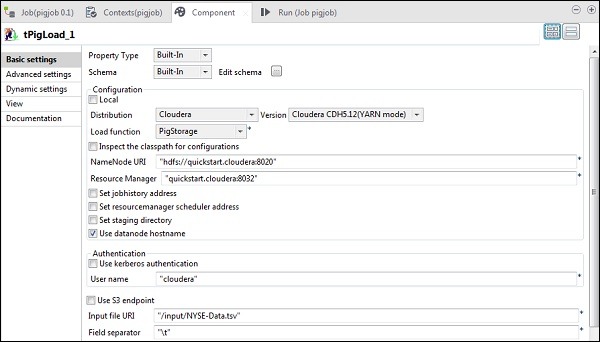

Configuring Components and Transformations

In tPigLoad, set the distribution to Cloudera and select your Cloudera version. The NameNode URI should be “hdfs://quickstart.cloudera:8020”, the Resource Manager should be “quickstart.cloudera:8032” (the default YARN ResourceManager port; 8020 is the NameNode port) and the user name should be “cloudera”.

In Input file URI, enter the path of the NYSE input file for the Pig job. This file must already be present on HDFS.

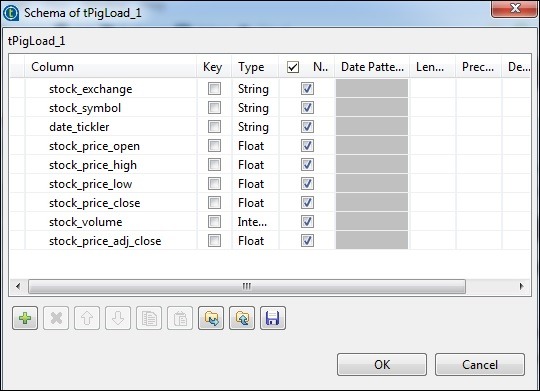

Click Edit schema and add the columns and their types as shown below.

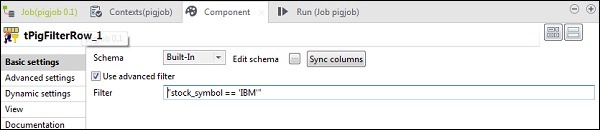
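The schema tells Pig how to split each input line into typed columns. The following is a minimal local sketch of that idea in Python, not what Talend generates. The column layout is an assumption: only stock_exchange, stock_symbol and stock_volume are named in this tutorial, the date column is hypothetical, and the comma separator is assumed (use whatever your NYSE file actually uses).

```python
from typing import NamedTuple


class StockRecord(NamedTuple):
    # Hypothetical column layout; the real one is what you entered in "Edit schema".
    stock_exchange: str
    stock_symbol: str
    date: str  # assumed column, not named in the tutorial
    stock_volume: int


def parse_line(line: str, sep: str = ",") -> StockRecord:
    """Split one input line on `sep` and convert each field to its schema type."""
    exchange, symbol, date, volume = line.rstrip("\n").split(sep)
    return StockRecord(exchange, symbol, date, int(volume))


if __name__ == "__main__":
    # Made-up sample row, for illustration only.
    print(parse_line("NYSE,IBM,2010-01-04,1000"))
```

Declaring stock_volume as an integer type matters later: the aggregation step can only average a numeric column.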

In tPigFilterRow, select the “Use advanced filter” option and enter “stock_symbol == 'IBM'” in the Filter field. Pig Latin uses == (with no space between the two equals signs) for equality.

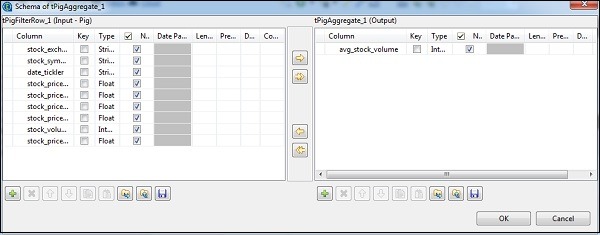
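The filter keeps only the rows for which the expression is true. As a rough Python analogue (a sketch, not Talend's implementation), the equivalent of stock_symbol == 'IBM' is a generator that yields matching rows; the three-column StockRecord type is a hypothetical subset of the schema.

```python
from typing import Iterable, Iterator, NamedTuple


class StockRecord(NamedTuple):
    # Hypothetical subset of the tPigLoad schema, for illustration only.
    stock_exchange: str
    stock_symbol: str
    stock_volume: int


def filter_symbol(rows: Iterable[StockRecord], symbol: str = "IBM") -> Iterator[StockRecord]:
    """Yield only the rows whose stock_symbol equals `symbol`."""
    return (row for row in rows if row.stock_symbol == symbol)


if __name__ == "__main__":
    rows = [
        StockRecord("NYSE", "IBM", 100),
        StockRecord("NYSE", "GE", 300),
        StockRecord("NYSE", "IBM", 200),
    ]
    print(list(filter_symbol(rows)))  # the two IBM rows
```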

In tPigAggregate, click Edit schema and add an avg_stock_volume column to the output schema as shown below.

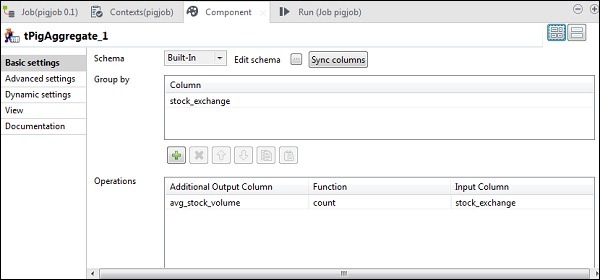

Now add the stock_exchange column under Group by. In the Operations table, add the avg_stock_volume output column with the avg function and stock_volume as the Input column.

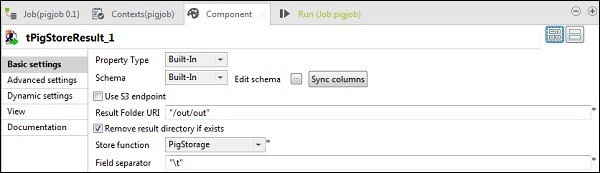
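Grouping by stock_exchange and averaging stock_volume can be sketched in plain Python as below. This is an illustration of the computation under assumed types, not the code Talend generates. Note that an average divides the summed volume by the number of rows in each group, whereas a count operation would return only the number of rows.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class StockRecord(NamedTuple):
    # Hypothetical subset of the schema, for illustration only.
    stock_exchange: str
    stock_symbol: str
    stock_volume: int


def avg_volume_by_exchange(rows: Iterable[StockRecord]) -> Dict[str, float]:
    """Group rows by stock_exchange and return the mean stock_volume per group."""
    totals: Dict[str, int] = defaultdict(int)
    counts: Dict[str, int] = defaultdict(int)
    for row in rows:
        totals[row.stock_exchange] += row.stock_volume
        counts[row.stock_exchange] += 1
    return {exchange: totals[exchange] / counts[exchange] for exchange in totals}


if __name__ == "__main__":
    ibm_rows = [StockRecord("NYSE", "IBM", 100), StockRecord("NYSE", "IBM", 200)]
    print(avg_volume_by_exchange(ibm_rows))  # {'NYSE': 150.0}
```

Because the rows were already filtered to IBM, every row falls into a single NYSE group, so the result holds one average.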

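In tPigStoreResult, enter the output path in Result Folder URI; this is where the result of the Pig job will be stored. Select PigStorage as the store function and, optionally, set the field separator to “\t” (tab). Tab is PigStorage's default delimiter, which is why this setting is not mandatory.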
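PigStorage writes each output tuple as one line of text with its fields joined by the separator. A minimal Python sketch of that output layout, assuming the output columns are stock_exchange followed by avg_stock_volume (an assumption about the schema), looks like this:

```python
import io
from typing import Dict, TextIO


def store_result(result: Dict[str, float], out: TextIO, sep: str = "\t") -> None:
    """Write one line per group: the group key, then the aggregated value, joined by sep."""
    for exchange, avg_volume in result.items():
        out.write(f"{exchange}{sep}{avg_volume}\n")


if __name__ == "__main__":
    buf = io.StringIO()
    store_result({"NYSE": 150.0}, buf)  # made-up value, for illustration only
    print(repr(buf.getvalue()))  # 'NYSE\t150.0\n'
```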

Executing the Pig Job

Now click Run to execute the Pig job. You can ignore the warnings shown during execution.

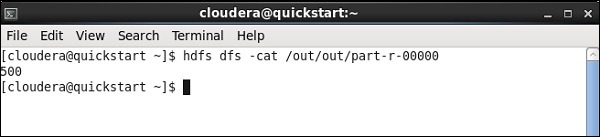

Once the job finishes, check the output at the HDFS path you specified for storing the Pig job result. The average stock volume of IBM is 500.
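You can also inspect the result from a terminal with `hdfs dfs -cat`. The sketch below wraps that command in Python and parses the tab-separated lines. It assumes the `hdfs` client is on the PATH, that the result folder contains Hadoop `part-*` files, and that each line holds stock_exchange followed by the average; the folder path in the usage example is hypothetical.

```python
import subprocess
from typing import Dict


def parse_result(text: str, sep: str = "\t") -> Dict[str, float]:
    """Parse lines of the form '<stock_exchange><sep><avg_stock_volume>'."""
    result: Dict[str, float] = {}
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        exchange, value = line.split(sep)
        result[exchange] = float(value)
    return result


def read_hdfs_result(folder: str) -> Dict[str, float]:
    """Concatenate the part files in `folder` with `hdfs dfs -cat` and parse them."""
    # HDFS expands the part-* glob itself, so no local shell is involved.
    completed = subprocess.run(
        ["hdfs", "dfs", "-cat", f"{folder}/part-*"],
        check=True,
        capture_output=True,
        text=True,
    )
    return parse_result(completed.stdout)
```

For example, `read_hdfs_result("/user/cloudera/pig_output")` would return a dictionary keyed by exchange, if your Result Folder URI were that (hypothetical) path.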